Data available from the project

What will you find on this page?

- General description of the ATCO2 corpora and links of interest.

- Description of each corpus released by the ATCO2 project, including its per-airport splits and license.

- Brief information about how we collected, pre-processed and annotated the ATCO2 corpora.

- Additional metadata (radar, ADS-B) and data characteristics (such as English Language Score and Voice Activity detection) in ATCO2.

- Automatic speech recognition systems trained with only the ATCO2 corpora as supervised data.

- Named entity recognition and sequence classification systems with ATCO2 corpora.

I. General description of the ATCO2 corpora

This page presents more information about the datasets collected and open-sourced by the ATCO2 project. The corpora released by ATCO2 can be used for many speech and text-based machine learning (ML) tasks, including:

- Automatic speech recognition (ASR),

- Contextualized ASR,

- English language detection on ATC speech,

- Speaker diarization,

- Named entity recognition (NER),

- Speaker role detection, i.e., determining whether speech comes from a pilot or an air traffic controller (ATCo).

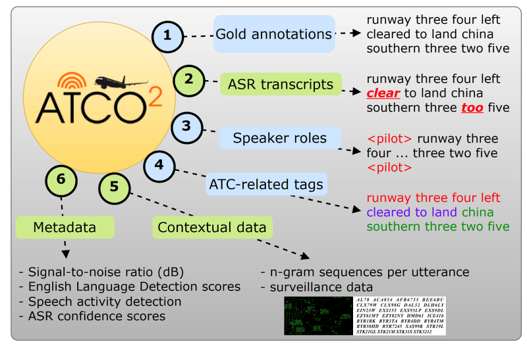

The figure below depicts the types of annotations offered by our corpus.

Find below some links of interest:

- Main paper (under review at the DMLR journal), which describes how the corpus was collected and presents baselines of ML-based models that you can train with the ATCO2 corpora: https://arxiv.org/abs/2211.04054

- Second application paper, published in the Aerospace journal: https://www.mdpi.com/2226-4310/10/10/898

II. Data released by ATCO2 project

The ATCO2 corpora are split into 3 main parts:

- Training set,

- 4h test set and

- 1h test subset (freely-available and downloadable below).

The training data

The training set consists of audio and raw metadata:

- Name: ATCO2-PL set,

- Overall size of the training dataset: 5281 hours (English + non-English),

- English-only portion: 4465 hours,

- Overall raw size of the audio files (sum of wav file lengths): 6225 hours (English + non-English),

- Accessible via: https://catalogue.elra.info/en-us/repository/browse/ELRA-S0484/

License: Available for Commercial and Non-Commercial Use (see ELRA)

The test data

The official test data consist of:

- Name: ATCO2-test-set-4h,

- Gold annotations of 4 hours of speech,

- Pre-processing, annotation generation and verification were done via SpokenData (https://www.spokendata.com/atc),

- Accessible via: https://catalogue.elra.info/en-us/repository/browse/ELRA-S0484/

License: Available for Commercial and Non-Commercial Use (see ELRA)

Test subset

A sample of the test data, released for research purposes, consisting of:

- Name: ATCO2-test-set-1h,

- Subset from the official 4-hour test set (above),

- This dataset was built only for the development and evaluation of ASR engines for English ATC data,

- Accessible via: https://www.replaywell.com/atco2/download/ATCO2-ASRdataset-v1_beta.tgz

- XML files for each audio file, updated with manually verified entity tags (e.g., callsign, command, values): atco2_1h_xml.tar.gz

License: available for research purposes

III. How we collected and transcribed the ATCO2 corpora

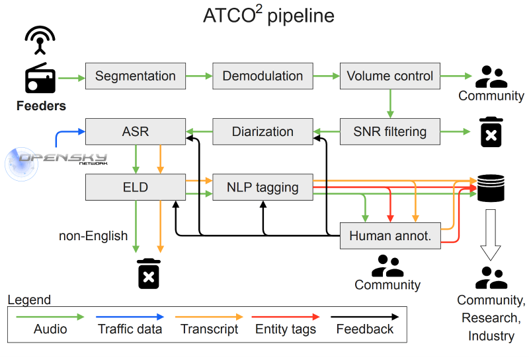

The figure above gives an overview of the data processing pipeline that the ATCO2 project developed and used to collect the ATCO2 corpus. The pipeline consists of several steps:

- Speech pre-processing tools (segmentation, volume adjustment and discarding noisy recordings),

- Speaker diarization (split audio per-speaker),

- Automatic speech recognition,

- English language detection (ELD),

- Speaker role detection (SRD), e.g., ATCo or pilot, and

- Labeling of callsigns, commands and values with named entity recognition (NER).

ATCO2 used this pipeline to pre-process both the ATCO2-PL-set corpus (the training corpus) and the ATCO2-test-set corpus.

The ATCO2 corpus is publicly available in the ELDA catalog at the following URL: http://catalog.elra.info/en-us/repository/browse/ELRA-S0484/.

Figure. Data collection and data-processing pipeline developed in ATCO2 project

IV. Additional metadata and corpus characteristics available as part of the ATCO2 corpora

During the ATCO2 project, audio data was collected from radio receivers (feeders) placed near different airports worldwide. Simultaneously, we captured ADS-B (radar) data, which we matched with the audio recordings. This step is especially important because it allows the ATCO2 corpora to be used for contextual ASR. In contextual ASR, we boost certain entities at decoding time, which can bring two benefits: i) a reduced word error rate (WER) and ii) increased accuracy on entity detection, e.g., for callsigns.

ADS-B data: Alongside the audio and transcript pairs for the training data, we also offer radar data (ADS-B) that is aligned to the target sample. For instance, the sample below shows the files available for the recording `LKPR_Tower_134_560MHz_20220119_185902`.

```
├── LKPR_Tower_134_560MHz_20220119_185902.boosting
├── LKPR_Tower_134_560MHz_20220119_185902.callsigns
├── LKPR_Tower_134_560MHz_20220119_185902.cnet_10_b15-13-400
├── LKPR_Tower_134_560MHz_20220119_185902.info
├── LKPR_Tower_134_560MHz_20220119_185902.segm
├── LKPR_Tower_134_560MHz_20220119_185902.wav
```

The files ending in “.callsigns” and “.boosting” contain ADS-B data: callsigns in ICAO format, e.g., ECC502 SWR115Z. The “.boosting” file contains different verbalizations of each callsign. We take the ICAO callsign and verbalize it as, e.g., “eclair five zero two; eclair zero two; swiss one one five zulu; swiss one five zulu”.

Further information about the verbalization rules is in our papers:

- INTERSPEECH 2021: https://www.lsv.uni-saarland.de/wp-content/uploads/2022/07/Interspeech2021_ATCO2.pdf

- 9th OpenSky Network Symposium: https://www.mdpi.com/2673-4591/13/1/8
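As a rough illustration of the verbalization step described above, the Python sketch below expands an ICAO callsign into spoken variants. It is not the project's implementation: the function names and lookup tables are hypothetical, only the `ECC` and `SWR` entries come from the example above, and the rule for the shortened variant (drop the first character after the airline designator) is merely inferred from those two examples. The spelling of the alphabet words (e.g., "alfa", "juliett", "xray") is also an assumption. The actual rules are described in the papers listed above.

```python
# Hypothetical airline designator table; only these two entries appear in the text above.
AIRLINE_WORDS = {"ECC": "eclair", "SWR": "swiss"}

DIGIT_WORDS = {
    "0": "zero", "1": "one", "2": "two", "3": "three", "4": "four",
    "5": "five", "6": "six", "7": "seven", "8": "eight", "9": "nine",
}

# Radiotelephony spelling alphabet (word spellings are an assumption).
LETTER_WORDS = dict(zip(
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ",
    ["alfa", "bravo", "charlie", "delta", "echo", "foxtrot", "golf", "hotel",
     "india", "juliett", "kilo", "lima", "mike", "november", "oscar", "papa",
     "quebec", "romeo", "sierra", "tango", "uniform", "victor", "whiskey",
     "xray", "yankee", "zulu"],
))


def spell(chars: str) -> str:
    """Spell out digits and letters one by one."""
    words = []
    for ch in chars:
        words.append(DIGIT_WORDS[ch] if ch in DIGIT_WORDS else LETTER_WORDS[ch])
    return " ".join(words)


def verbalize(callsign: str) -> list[str]:
    """Return spoken variants of an ICAO callsign such as 'SWR115Z'."""
    callsign = callsign.upper()
    designator, suffix = callsign[:3], callsign[3:]
    # Fall back to spelling the designator when the airline is unknown.
    airline = AIRLINE_WORDS.get(designator, spell(designator))
    variants = [f"{airline} {spell(suffix)}"]
    # Assumed shortening rule: drop the first character of the suffix.
    if len(suffix) > 2:
        variants.append(f"{airline} {spell(suffix[1:])}")
    return variants


for icao in ["ECC502", "SWR115Z"]:
    print(icao, "->", "; ".join(verbalize(icao)))
# ECC502 -> eclair five zero two; eclair zero two
# SWR115Z -> swiss one one five zulu; swiss one five zulu
```

A list of such variants is the kind of input a contextual ASR system could boost at decoding time.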

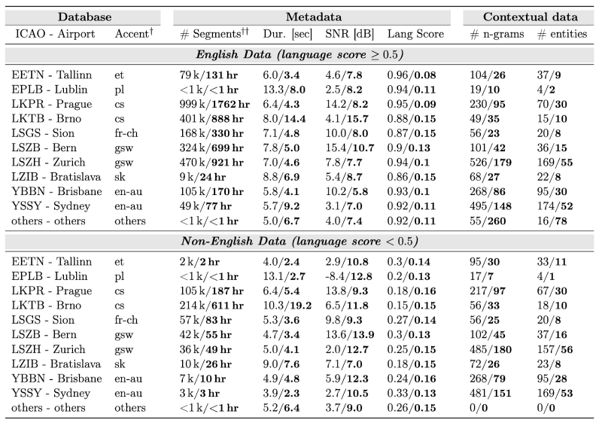

Additional characteristics available for the ATCO2 corpora, per airport: The table below reports statistics about the collected databases for each airport:

- Duration, SNR, language scores and contextual data columns report the mean and standard deviation (mean/std) per sample,

- The available data at ELDA provides timing information in RTTM format, which can be used for speaker diarization or VAD,

- The languages are abbreviated in IETF format for simplicity.

Table. Metadata about data collected by ATCO2
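The RTTM timing information mentioned above can be read with a few lines of Python. The sketch below assumes the standard NIST RTTM layout (`SPEAKER <file> <channel> <onset> <duration> <NA> <NA> <speaker> ...`); whether the ATCO2 files follow exactly this layout is an assumption, the function names are hypothetical, and the sample lines are invented for illustration.

```python
from collections import defaultdict


def parse_rttm(text: str) -> list[tuple[str, float, float, str]]:
    """Parse RTTM text into (file_id, onset, duration, speaker) tuples."""
    segments = []
    for line in text.splitlines():
        fields = line.split()
        # Keep only well-formed SPEAKER records.
        if len(fields) < 8 or fields[0] != "SPEAKER":
            continue
        file_id, onset, duration, speaker = fields[1], fields[3], fields[4], fields[7]
        segments.append((file_id, float(onset), float(duration), speaker))
    return segments


def speech_per_speaker(segments) -> dict[str, float]:
    """Sum the segment durations (in seconds) for each speaker label."""
    totals = defaultdict(float)
    for _file_id, _onset, duration, speaker in segments:
        totals[speaker] += duration
    return dict(totals)


# Invented sample, not taken from the corpus.
SAMPLE_RTTM = """\
SPEAKER rec_001 1 0.00 2.50 <NA> <NA> A <NA> <NA>
SPEAKER rec_001 1 3.10 1.50 <NA> <NA> B <NA> <NA>
SPEAKER rec_001 1 5.00 1.00 <NA> <NA> A <NA> <NA>
"""

segments = parse_rttm(SAMPLE_RTTM)
print(speech_per_speaker(segments))  # {'A': 3.5, 'B': 1.5}
```

The onset and duration pairs give speech regions for VAD, and the speaker column gives the reference labels for diarization.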

If you are interested in acquiring the ATCO2 dataset, you can check the table above to find out whether the data you are seeking matches one of the airport packages. Note that in most cases, you can select the data with language scores higher than 0.5, which partly ensures that the audio is in English.
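The language-score selection described above amounts to a simple threshold filter. The following is a minimal sketch, assuming the scores have already been loaded into memory; the `Sample` class, its field names, and the example values are hypothetical and do not reflect the actual file format of the corpus.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    recording_id: str      # hypothetical identifier
    english_score: float   # English language detection score in [0, 1]


def select_english(samples: list[Sample], threshold: float = 0.5) -> list[Sample]:
    """Keep the samples whose English language score is above the threshold."""
    return [s for s in samples if s.english_score > threshold]


# Invented values for illustration only.
samples = [
    Sample("rec_001", 0.93),
    Sample("rec_002", 0.12),
    Sample("rec_003", 0.50),
    Sample("rec_004", 0.71),
]

kept = select_english(samples)
print([s.recording_id for s in kept])  # ['rec_001', 'rec_004']
```

Raising the threshold trades data volume for a higher share of English audio.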

The characteristics per airport can be easily exported to text files by running the preparation script from our GitHub repository: https://github.com/idiap/atco2-corpus/tree/main/data/databases/atco2_pl_set

V. Word Error Rates of ASR models trained with ATCO2 corpora

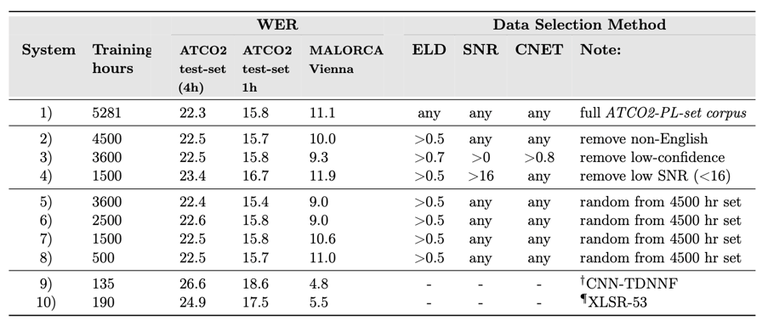

In the table below, you can find:

- Performance of a hybrid ASR model (built with the Kaldi toolkit) trained with the ATCO2-PL corpus,

- Performance in terms of word error rates (WERs) for ‘out-of-domain’ datasets (see column “Malorca Vienna”),

- Performance on “in-domain” data, see the columns with “ATCO2-test-set-1h/4h”.

Table. Different ASR models trained with different amounts of ATCO2 data.
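For readers unfamiliar with the metric, WER is the word-level edit distance (substitutions, deletions and insertions) between the reference and the hypothesis, divided by the number of reference words. The sketch below is a plain Python illustration of that definition, not the scoring tool used to produce the table; the function name and the example sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the first i-1 reference words
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


reference = "swiss one one five zulu descend flight level eight zero"
hypothesis = "swiss one five zulu descend flight level eight zero"
# One deleted word out of ten reference words.
print(f"WER = {word_error_rate(reference, hypothesis):.1%}")  # WER = 10.0%
```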

You can find more information, including WERs, in the following papers:

- Benchmark on ASR with different ATC datasets: https://www.isca-archive.org/interspeech_2020/zuluagagomez20_interspeech.html

- First ATCO2 paper: https://arxiv.org/abs/2211.04054

- Lessons Learned in ATCO2: https://www.mdpi.com/2226-4310/10/10/898

We also release a set of GitHub repositories:

- How to prepare the corpus with the Kaldi toolkit: https://github.com/idiap/atco2-corpus

- How to fine-tune your Wav2Vec2 model with ATCO2 data: https://github.com/idiap/w2v2-air-traffic

- Text-based diarization with BERT: https://github.com/idiap/bert-text-diarization-atc

VI. Named entity recognition and sequence classification systems with ATCO2 corpora

The ATCO2 corpora can be employed to perform several natural (or spoken) language understanding (NLU) tasks. NLU can be used to:

- Automatically analyze and interpret the meaning of spoken messages between pilots and ATCos,

- Extract important information, such as flight numbers, callsigns, or airport codes,

- Detect the end of an utterance (“end pointing detection”),

- Classify speakers as ATCo or pilot based on their messages,

- Perform text-based diarization: https://arxiv.org/abs/2110.05781

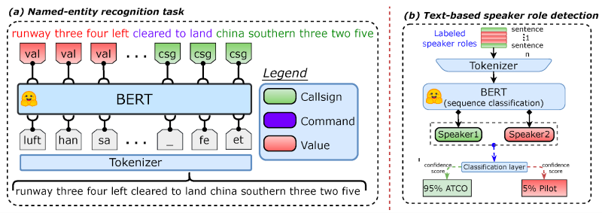

Further information is given in https://www.mdpi.com/2226-4310/10/10/898, while the figure below shows examples of the named-entity recognition and text-based speaker role detection tasks.

Figure. Named entity recognition and text-based speaker role detection tasks

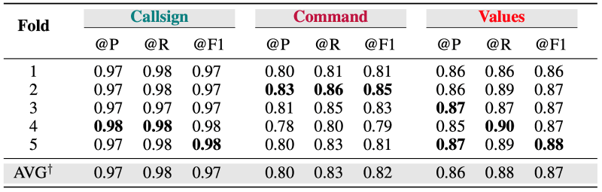

Furthermore, the table below shows the performance in terms of precision (@P), recall (@R) and F1-score (@F1) when fine-tuning a BERT model on the named-entity recognition task with the ATCO2-test-set-4h in a 5-fold cross-validation scheme.

Table. Performances of NER with ATCO2-test-set-1h corpus.
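As a reminder of how these three metrics relate, the sketch below computes precision, recall and F1 from sets of gold and predicted entities. It assumes exact-match scoring of `(start, end, label)` spans, which is a common convention but not necessarily the one used for the table; the function name and the example spans are hypothetical.

```python
Span = tuple[int, int, str]  # (start token, end token, entity label)


def precision_recall_f1(gold: set[Span], predicted: set[Span]) -> tuple[float, float, float]:
    """Exact-match entity-level precision, recall and F1."""
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Invented example: the value entity is split in two by the model.
gold = {(0, 5, "callsign"), (5, 6, "command"), (6, 10, "value")}
predicted = {(0, 5, "callsign"), (5, 6, "command"), (6, 9, "value"), (9, 10, "value")}

p, r, f1 = precision_recall_f1(gold, predicted)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=0.500 R=0.667 F1=0.571
```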

LID dataset (V1).

"The ATC LID/ASR evaluation dataset is going to be published at Interspeech 2021. Stay tuned!"

Abstract: Detecting English Speech in the Air Traffic Control Voice Communication

Name: ATCO2-LIDdataset-v1_beta

Description: This dataset was built for the development and evaluation of techniques for English and non-English speech classification of ATC data. Note: The dataset is considered a beta version and will be updated in the future (more language pairs will be added and some cleaning/debugging may happen). The dataset consists of the following language pairs:

- CZEN - devel (6.11 hours),

- CZEN - eval (6.21 hours),

- FREN - devel (2.68 hours),

- FREN - eval (3.27 hours),

- GEEN - devel English only (5.61 hours),

- GEEN - eval (2.41 hours),

- EN-AU (Australian English) - eval English only (0.17 hours).

Where possible, we split each pair into development and evaluation subsets. We provide the audio (wav format), an English automatic transcript generated by an ASR system, and an info file with the estimated SNR, language and length.

Link to file to download: https://www.replaywell.com/atco2/download/ATCO2-LIDdataset-v1_beta.tgz