ATCO2 at Interspeech 2021

Figure 1. Interspeech 2021 will be held between August 30 and September 3, 2021.

This blog post briefly reviews each of the three research papers that ATCO2 will present on-site at Interspeech. The first paper concerns the language spoken in air traffic control (ATC) communication.

“Detecting English Speech in the Air Traffic Control Voice Communication” by @ReplayWell

In the ATCO2 project, we launched a community platform for collecting ATC speech worldwide. Filtering out unseen non-English speech is one of the main components of the data processing pipeline. The proposed English Language Detection (ELD) system is based on embeddings from a Bayesian subspace multinomial model, which is trained on the word confusion network produced by an ASR system. The system is robust, easy to train, and lightweight. In the in-domain scenario, we achieved an equal error rate (EER) of 0.0439, a 50% relative reduction compared to the state-of-the-art acoustic ELD system based on x-vectors. In the unseen-language (out-of-domain) condition, we achieved an EER of 0.1352, a 33% relative reduction compared to the acoustic ELD system. We plan to publish the evaluation dataset from the ATCO2 project.
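To make the reported metric concrete, here is a minimal sketch of how an EER and a relative reduction can be computed. The scores are toy values invented for illustration, and the function names are hypothetical; this is not the evaluation code used in the paper.

```python
def equal_error_rate(english_scores, other_scores):
    """Approximate the EER by scanning candidate thresholds.

    The EER is the operating point where the false-reject rate (English
    speech rejected) equals the false-accept rate (non-English accepted).
    """
    thresholds = sorted(set(english_scores) | set(other_scores))
    best_gap, eer = float("inf"), None
    for t in thresholds:
        # English utterances scored below the threshold are missed.
        frr = sum(s < t for s in english_scores) / len(english_scores)
        # Non-English utterances scored at or above it are wrongly accepted.
        far = sum(s >= t for s in other_scores) / len(other_scores)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


def relative_reduction(baseline, new):
    """Fraction by which `new` improves on `baseline` (0.5 means 50%)."""
    return (baseline - new) / baseline


# Toy detector scores (higher means "more likely English").
english = [0.9, 0.8, 0.7, 0.4]
other = [0.6, 0.3, 0.2, 0.1]
print(equal_error_rate(english, other))
print(relative_reduction(0.10, 0.05))
```

A relative reduction compares two systems on the same test set, so it says how much of the baseline error was removed rather than how many absolute points were gained.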

Further information in the following links:

Teaser: https://www.youtube.com/watch?v=qj42c4qmmAc

Abstract: https://arxiv.org/abs/2104.02332

Paper: https://arxiv.org/abs/2104.02332

“Boosting of Contextual Information in ASR for Air-Traffic Call-Sign Recognition” by @Brno University of Technology

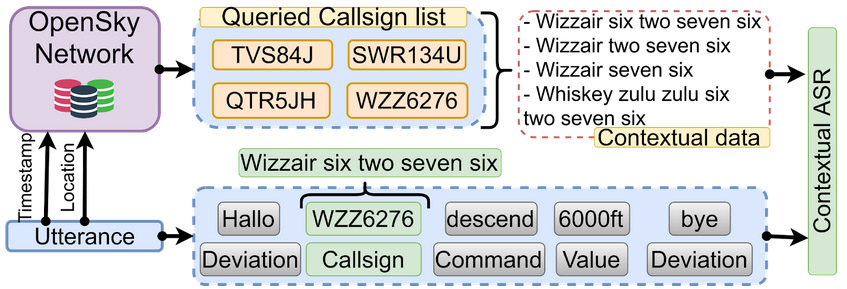

Contextual adaptation is a technique of “suggesting” small snippets of text that are likely to appear in the speech recognition output. The snippets are derived from the current “situation” of the speaker; in the ATCO2 project, this is the location and time. The location and time are then used to query the OpenSky Network for a list of callsigns (airplanes) that match these two inputs.
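The lookup described above could be sketched as follows. The endpoint, the bounding-box parameter names (`lamin`, `lomin`, `lamax`, `lomax`), the `time` parameter, and the position of the callsign in each state vector are assumptions based on the public OpenSky REST API and should be checked against its documentation. The helper names and the one-degree box are hypothetical, and this is not the project's actual retrieval code.

```python
from urllib.parse import urlencode

# Assumed OpenSky REST endpoint for aircraft state vectors.
OPENSKY_STATES_URL = "https://opensky-network.org/api/states/all"


def build_query_url(lat, lon, half_box_deg=1.0, unix_time=None):
    """Build a bounding-box query centered on the receiver location."""
    params = {
        "lamin": lat - half_box_deg,
        "lomin": lon - half_box_deg,
        "lamax": lat + half_box_deg,
        "lomax": lon + half_box_deg,
    }
    if unix_time is not None:
        params["time"] = int(unix_time)
    return f"{OPENSKY_STATES_URL}?{urlencode(params)}"


def extract_callsigns(response_json):
    """Collect the distinct, non-empty callsigns from a decoded response.

    Assumes each state vector is a list whose second element is the
    callsign, which may be null or padded with spaces.
    """
    states = response_json.get("states") or []
    callsigns = {(state[1] or "").strip() for state in states}
    callsigns.discard("")
    return sorted(callsigns)


# A hand-written response in the assumed format (no network access needed).
sample = {
    "time": 1630000000,
    "states": [
        ["49d3a1", "CSA123AB", "Czech Republic"],
        ["3c6444", "DLH4TK  ", "Germany"],
        ["aaaaaa", None, "Unknown"],
    ],
}
print(build_query_url(50.0, 14.0, 1.0, 1630000000))
print(extract_callsigns(sample))
```

Keeping URL construction and response parsing separate from the network call makes both steps easy to test offline.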

Applying Automatic Speech Recognition (ASR) to the Air Traffic Control (ATC) domain is difficult due to factors such as noisy radio channels, foreign accents, cross-language code-switching, a very fast speech rate, and a situation-dependent vocabulary with many infrequent words. Combined, these factors lead to error rates that make speech recognition hard to deploy.

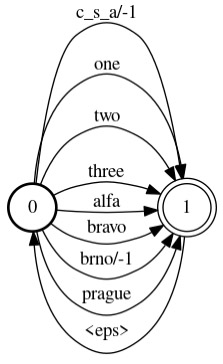

For ASR in ATC, contextual adaptation is beneficial. For instance, we can use a list of airplanes that are nearby. From an airport identity, we can derive local waypoints, local geographical names, phrases in the local language, etc. It is important that the adaptation is dynamic, i.e., the adaptation snippets of text change over time. The adaptation also has to be lightweight, so it should not require rebuilding the recognition network from scratch. We apply the snippets of text by means of Weighted Finite State Transducer (WFST) composition. An example of a biasing WFST is shown in Figure 2.

Figure 2. “Toy-example” topology of a biasing WFST graph for boosting the ASR’s recognition network. The boosted callsign is ‘CSA one two three alfa bravo’.
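The effect of boosting can be illustrated with a much simpler stand-in than WFST composition: rescoring an n-best list so that hypotheses containing a complete boosted callsign get a cost discount. The function names, costs, and discount value below are invented for illustration. The paper composes a biasing WFST with the recognition network instead, so this sketch only conveys the idea.

```python
def count_phrase_words(words, phrase):
    """Count words covered by complete, non-overlapping matches of `phrase`."""
    n, covered, i = len(phrase), 0, 0
    while i + n <= len(words):
        if words[i:i + n] == phrase:
            covered += n
            i += n
        else:
            i += 1
    return covered


def rescore(nbest, boosted_phrases, discount_per_word=0.5):
    """Lower the cost of hypotheses that contain boosted phrases.

    `nbest` is a list of (text, cost) pairs, where a lower cost is better.
    Only full matches are rewarded, so a partially recognized callsign
    gets no discount.
    """
    rescored = []
    for text, cost in nbest:
        words = text.split()
        bonus = sum(count_phrase_words(words, p.split()) for p in boosted_phrases)
        rescored.append((text, cost - discount_per_word * bonus))
    return sorted(rescored, key=lambda pair: pair[1])


# Toy n-best list: the correct hypothesis starts with the worse cost.
nbest = [
    ("c s a one two tree alfa bravo descend", 10.0),
    ("CSA one two three alfa bravo descend", 11.5),
]
boosted = ["CSA one two three alfa bravo"]
for text, cost in rescore(nbest, boosted):
    print(cost, text)
```

Because the boosted list is just data passed in at decoding time, it can change with every utterance without retraining or rebuilding anything, which is the property the dynamic, lightweight adaptation above relies on.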

Further information in the following link:

Paper: Boosting of contextual information in ASR for air-traffic call-sign recognition

“Contextual Semi-Supervised Learning: An Approach to Leverage Air-Surveillance and Untranscribed ATC Data in ASR Systems” by @Idiap Research Institute

Air traffic management, and specifically air traffic control (ATC), relies mostly on voice communications between Air Traffic Controllers (ATCos) and pilots. In most cases, these voice communications follow a well-defined grammar that could be leveraged in Automatic Speech Recognition (ASR) technologies. The callsign used to address an airplane is an essential part of all ATCo-pilot communications. We propose a two-step approach that adds contextual knowledge during semi-supervised training to reduce the ASR error rate on the part of the utterance that contains the callsign. First, we represent the contextual knowledge (i.e., air-surveillance data) of an ATCo-pilot communication in a WFST. Then, during Semi-Supervised Learning (SSL), the contextual knowledge is added by second-pass decoding (i.e., lattice rescoring). Results show that “unseen domains” (e.g., data from airports not present in the supervised training data) benefit more from contextual SSL than from standalone SSL. For this task, we introduce the Callsign Word Error Rate (CA-WER) as an evaluation metric, which assesses ASR performance only on the spoken callsign in an utterance. On a challenging ATC test set gathered from LiveATC, we obtained a 32.1% relative CA-WER improvement by applying SSL, and an additional 17.5% CA-WER improvement by adding contextual knowledge during SSL.
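As a rough illustration of the metric, the sketch below computes a word error rate restricted to callsign words. It assumes the callsign portion of each reference and hypothesis has already been extracted, which the summary above does not describe, so the input format and function names are hypothetical rather than the paper's scoring procedure.

```python
def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution or match
            ))
        prev = curr
    return prev[-1]


def callsign_wer(pairs):
    """Errors on callsign words divided by the number of reference callsign words."""
    errors = sum(edit_distance(ref.split(), hyp.split()) for ref, hyp in pairs)
    ref_words = sum(len(ref.split()) for ref, _ in pairs)
    return errors / ref_words


# Toy (reference callsign, recognized callsign) pairs.
pairs = [
    ("lufthansa four tango kilo", "lufthansa four tango kilo"),
    ("csa one two three alfa bravo", "csa one two tree alfa"),
]
print(callsign_wer(pairs))
```

Restricting the count to callsign words keeps errors elsewhere in the utterance from masking how well the system recognizes the part that identifies the aircraft.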

Figure 3. Process of retrieving a list of callsigns (contextual data) from OpenSky Network. The contextual data is the compendium of all possible verbalized versions of each callsign.
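The verbalization step in Figure 3 might look like the following sketch, which expands a written callsign into a few spoken variants. The one-entry airline lookup table, the choice of variants, and the plain English digit words are illustrative assumptions; a real system would need a complete airline designator list and many more spoken variants.

```python
from string import ascii_uppercase

DIGITS = dict(zip("0123456789",
                  "zero one two three four five six seven eight nine".split()))
LETTERS = dict(zip(ascii_uppercase, (
    "alfa bravo charlie delta echo foxtrot golf hotel india juliett kilo lima "
    "mike november oscar papa quebec romeo sierra tango uniform victor whiskey "
    "xray yankee zulu").split()))
# Hypothetical, deliberately tiny table of airline designator -> spoken name.
TELEPHONY = {"DLH": "lufthansa"}


def spell(chars):
    """Spell out letters and digits one by one."""
    return [DIGITS.get(c) or LETTERS[c] for c in chars]


def verbalize(callsign):
    """Return spoken variants of a callsign such as 'DLH4TK'."""
    callsign = callsign.strip().upper()
    prefix, suffix = callsign[:3], callsign[3:]
    variants = set()
    if prefix.isalpha() and suffix:
        tail = " ".join(spell(suffix))
        # Variant 1: the airline designator spelled letter by letter.
        variants.add(f"{' '.join(spell(prefix))} {tail}")
        # Variant 2: the airline's spoken name, when it is known.
        if prefix in TELEPHONY:
            variants.add(f"{TELEPHONY[prefix]} {tail}")
    else:
        # No airline prefix: spell the whole callsign.
        variants.add(" ".join(spell(callsign)))
    return sorted(variants)


print(verbalize("DLH4TK"))
```

Each variant can then serve as one boosted snippet of text, so the recognizer is prepared for whichever form the speaker actually uses.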

Further information in the following link:

Paper: Contextual Semi-Supervised Learning: An Approach To Leverage Air-Surveillance and Untranscribed ATC Data in ASR Systems