Is it possible to have a cross-accent speech recognizer that works for different airports?

One of the main concerns in automatic speech recognition (ASR) for air-traffic communications is the presence of many non-English accents in the English communication between pilots and air traffic controllers (ATCOs). Most international flights use English, whereas domestic ones often rely on the local language, always following the ICAO guidelines, under which Arabic, Chinese, French, Russian and Spanish are also accepted. Currently, voice communication and data-link communication are the only means of contact between ATCOs and pilots: voice is by far the most widely used, while data link is a non-spoken method that is mandatory for oceanic messages and used only to a limited extent for domestic purposes.

ASR systems in ATC environments face additional complexity from the accents of non-native English speakers and from vocabularies that differ across airports. Previous work has tackled this in a ‘divide-and-conquer’ fashion, building a separate system with its own lexicon (including non-English words such as ‘Bonjour’, ‘Hallo’ or ‘Ahoj’) for each airport; however, this solution is costly and not feasible at scale.

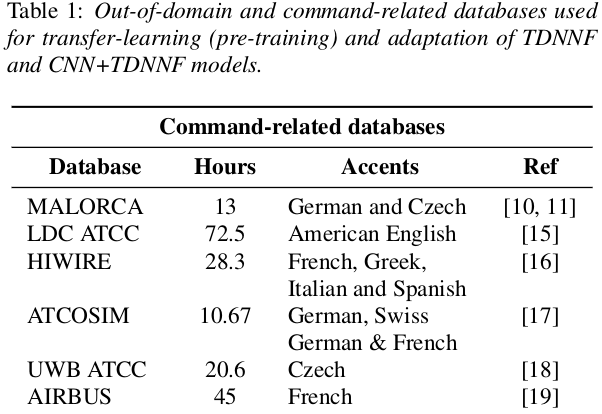

Current state-of-the-art ASR systems have shown that a single system can handle cross-accent recognition. Such systems are usually trained on speech data from several airports and have shown overall improvements. Here we introduce our first results with this kind of ASR system. The following paragraphs present an exploratory benchmark of several state-of-the-art ASR models trained on more than 170 hours of ATCO speech data, developed within the ATCO2 project. We show that the errors caused by speakers' accents are largely mitigated by the amount of training data, which makes such a system feasible for ATC environments. The developed ASR system achieves an average word error rate (WER) of 7.75% across four test sets. We also tested a system based on Byte-Pair Encoding (BPE), which can recognize out-of-vocabulary (OOV) words (common in ATCO-pilot communication), and obtained a 35% relative improvement in WER. The benchmark relies on the databases presented in Table 1, which cover seven non-English accents.
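WER, the metric used throughout this post, counts the word substitutions, deletions and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of reference words; a relative improvement expresses the WER reduction as a fraction of the baseline WER. The snippet below is a minimal Python sketch of both definitions, not the scoring tool used in our experiments (Kaldi has its own scoring scripts); the function names and the example ATC phrase are hypothetical.

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Word-level Levenshtein distance: minimum number of substitutions,
    deletions and insertions that turn the hypothesis into the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] = edit distance between the first i-1 reference words and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion (reference word missed)
                          curr[j - 1] + 1,     # insertion (extra hypothesis word)
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent: word errors / number of reference words."""
    n_ref = len(reference.split())
    if n_ref == 0:
        raise ValueError("reference must contain at least one word")
    return 100.0 * word_errors(reference, hypothesis) / n_ref


def relative_improvement(baseline_wer: float, new_wer: float) -> float:
    """Relative WER reduction in percent with respect to the baseline."""
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


# Hypothetical example: one substitution ("three" -> "tree") and one
# deletion ("alpha") over 10 reference words gives 20% WER.
ref = "lufthansa three two alpha descend flight level one two zero"
hyp = "lufthansa tree two descend flight level one two zero"
print(wer(ref, hyp))
```

For instance, a hypothetical baseline of 10.0% WER that drops to 6.5% is a 35% relative improvement, even though the absolute gain is only 3.5 points.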

Experimental setup

In our work, we extracted 7.6 hours of speech data as test sets from the databases presented in Table 1: 2.5, 2.2 and 1.9 hours for Atcosim and for the data provided by the Prague and Vienna Air Navigation Service Providers (ANSPs), respectively, and 1 hour for Airbus. To measure whether the amount of data and the presence of non-English accents (including a variety of non-English words) influence training, we built three training sets from the six databases. ‘Train 1’ is composed of Atcosim, Malorca and UWB ATCC (38.7 hours); ‘Train 2’ merges Airbus, ATCC USA and Hiwire (137.7 hours); and ‘Tr1+Tr2’ contains all available databases, i.e. Train 1 + Train 2.
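The split design can be written down as a small configuration, which also checks the arithmetic: Train 1 and Train 2 together give 176.4 hours, consistent with the "more than 170 hours" quoted above, and the four test sets add up to 7.6 hours. This is a hypothetical Python encoding of the numbers stated in this section, not a file from our setup; per-database training hours are not given here, so only the split totals are used.

```python
# Hypothetical encoding of the data splits; all hours are taken from the text.
TEST_HOURS = {"Atcosim": 2.5, "Prague": 2.2, "Vienna": 1.9, "Airbus": 1.0}

TRAIN_SETS = {
    "Train 1": {"databases": ["Atcosim", "Malorca", "UWB ATCC"], "hours": 38.7},
    "Train 2": {"databases": ["Airbus", "ATCC USA", "Hiwire"], "hours": 137.7},
}


def combine(*names: str) -> dict:
    """Merge training splits: concatenate database lists and sum their hours."""
    databases: list = []
    hours = 0.0
    for name in names:
        databases.extend(TRAIN_SETS[name]["databases"])
        hours += TRAIN_SETS[name]["hours"]
    # Round to one decimal to hide floating-point noise in the sum.
    return {"databases": databases, "hours": round(hours, 1)}


TRAIN_SETS["Tr1+Tr2"] = combine("Train 1", "Train 2")
TOTAL_TEST_HOURS = round(sum(TEST_HOURS.values()), 1)

print(TRAIN_SETS["Tr1+Tr2"]["hours"])  # 176.4
print(TOTAL_TEST_HOURS)                # 7.6
```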

The word list for the lexicon was assembled from the transcripts of all the ATC databases and from other publicly available resources, such as lists of airline names, airport names and the ICAO spelling alphabet. Data preparation, acoustic modeling and decoding follow the standard recipes of the Kaldi speech recognition toolkit (more information can be found in our second blog post [link to the second blog]).
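As an illustration of the word-list step, the sketch below collects the vocabulary from transcripts plus extra lists (airline names, the ICAO spelling alphabet). It is a hypothetical Python helper, not part of the Kaldi recipe; the tokenization rule (lower-casing and keeping runs of word characters, apostrophes and hyphens) is an assumption. Turning the word list into a pronunciation lexicon, such as the `lexicon.txt` file used in Kaldi recipes, additionally requires pronunciations and is not shown.

```python
import re
from typing import Iterable, List

# ICAO spelling alphabet, one of the extra resources mentioned in the text.
ICAO_ALPHABET = [
    "Alfa", "Bravo", "Charlie", "Delta", "Echo", "Foxtrot", "Golf", "Hotel",
    "India", "Juliett", "Kilo", "Lima", "Mike", "November", "Oscar", "Papa",
    "Quebec", "Romeo", "Sierra", "Tango", "Uniform", "Victor", "Whiskey",
    "X-ray", "Yankee", "Zulu",
]


def tokenize(text: str) -> List[str]:
    """Lower-case and keep runs of (Unicode) word characters, apostrophes, hyphens."""
    return re.findall(r"[\w'-]+", text.lower())


def build_word_list(transcripts: Iterable[str], *extra_lists: Iterable[str]) -> List[str]:
    """Sorted union of the words in the transcripts and in the extra lists."""
    words = set()
    for line in transcripts:
        words.update(tokenize(line))
    for entries in extra_lists:
        for entry in entries:
            # Multi-word entries (e.g. "Czech Airlines") contribute each word.
            words.update(tokenize(entry))
    return sorted(words)


# Hypothetical usage with toy transcripts and a toy airline list.
transcripts = ["Lufthansa three two descend flight level one two zero",
               "Czech one alfa, contact Praha radar"]
airlines = ["Lufthansa", "Czech Airlines"]
word_list = build_word_list(transcripts, airlines, ICAO_ALPHABET)
print(len(word_list))
```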

First results

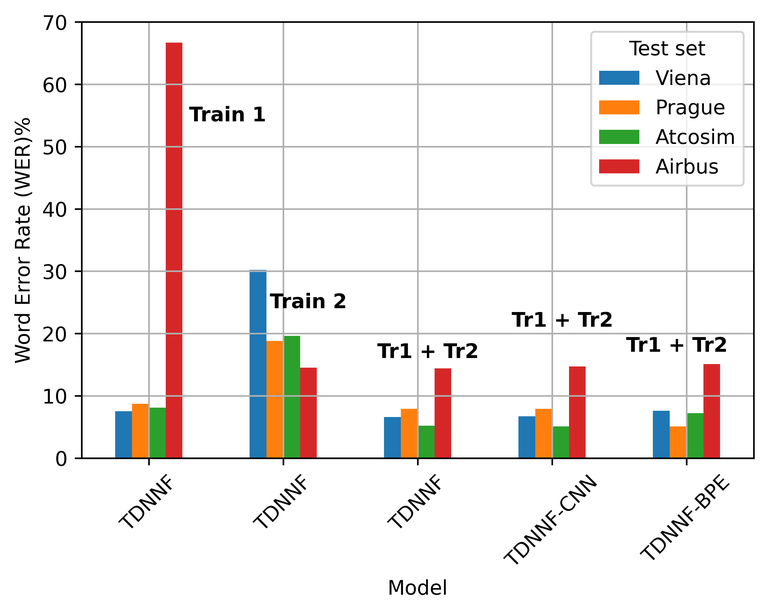

We present our main results in Figure 1, a bar plot with the results of each proposed acoustic model. Here, ‘TDNNF’ stands for Factorized Time-Delay Neural Network, and ‘TDNNF-CNN’ is a TDNNF with additional convolutional layers on top; both models use n-gram language models (LMs), and we report results with a 4-gram LM only. ‘TDNNF-BPE’ is a TDNNF-based model that works with sub-word units: its language model operates on characters or sub-word units (BPEs) instead of whole words. So far, our main conclusions are:

- ASR for cross-accent ATCO-pilot communications is feasible when a sufficient amount of data is used for development.

- For the Vienna test set, the best model yielded 6.6% WER, a new baseline compared to the earlier MALORCA project.

- For the Prague test set, the best model was TDNNF-BPE with 5.1% WER. We attribute this improvement, which is larger than on the other test sets, to the sub-word unit approach, which is able to recognize OOV words: the Prague test set has an OOV rate of 3.3%, much higher than that of the other test sets (about 1%).

- For the Atcosim test set, the TDNNF-CNN system yielded a new baseline of 5% WER, a 3.9% relative WER improvement over TDNNF.

- For the Airbus test set, the best model yielded 14.4% WER, but adding more data brought no significant improvement (less than 0.1% WER; compare the results for Train 2 and Tr1+Tr2).
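The OOV rate quoted for the Prague test set is the share of test words that the recognizer's word list does not contain. Below is a minimal Python sketch, assuming a token-based definition (every running word counts, not just distinct words) and simple whitespace tokenization; this post does not state which variant was used, and the example data are hypothetical.

```python
from typing import Iterable, Set


def oov_rate(test_transcripts: Iterable[str], lexicon_words: Set[str]) -> float:
    """Percentage of running test-set words (tokens) missing from the lexicon."""
    total = 0
    oov = 0
    for line in test_transcripts:
        for word in line.lower().split():
            total += 1
            if word not in lexicon_words:
                oov += 1
    return 100.0 * oov / total if total else 0.0


# Hypothetical lexicon and test transcripts: 3 of the 12 test words are unknown.
lexicon = {"lufthansa", "three", "two", "descend", "flight", "level", "one", "zero"}
test = ["lufthansa three two descend flight level one two zero",
        "contact praha radar"]
print(oov_rate(test, lexicon))  # 25.0
```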

Figure 1. Benchmark of three acoustic models (TDNNF, TDNNF-CNN and TDNNF-BPE) with different amounts of training data.
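To make the sub-word idea behind TDNNF-BPE concrete, here is a minimal sketch of byte-pair-encoding merge learning in the style of Sennrich et al.: start from characters, repeatedly merge the most frequent adjacent pair, then apply the learned merges, in order, to new words. It is an illustrative Python implementation, not the tooling used for our models; the toy word counts and the `</w>` end-of-word marker are assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pair = Tuple[str, str]
END = "</w>"  # end-of-word marker (assumed convention)


def merge_symbols(symbols: Tuple[str, ...], pair: Pair) -> Tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` by a single merged symbol."""
    out: List[str] = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(word_counts: Dict[str, int], num_merges: int) -> List[Pair]:
    """Greedily learn up to `num_merges` merges, most frequent pair first."""
    vocab = {tuple(word) + (END,): count for word, count in word_counts.items()}
    merges: List[Pair] = []
    for _ in range(num_merges):
        pairs: Counter = Counter()
        for symbols, count in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += count
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # ties: the pair counted first wins
        merges.append(best)
        vocab = {merge_symbols(s, best): c for s, c in vocab.items()}
    return merges


def segment(word: str, merges: List[Pair]) -> List[str]:
    """Split a (possibly unseen) word by applying the learned merges in order."""
    symbols = tuple(word) + (END,)
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return list(symbols)


merges = learn_bpe({"low": 7, "lower": 5}, num_merges=10)
print(segment("lowest", merges))  # ['lowe', 's', 't', '</w>']
```

An unseen word such as ‘lowest’ still receives a segmentation made of known units, which is why a BPE-based system can, in principle, output words that never appeared as whole words in its training vocabulary.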

Conclusions and future experiments

In our first study within the ATCO2 project, we presented a benchmark of different neural-network acoustic models for ATCO-pilot communications. Our system generalized across several non-English accents, which makes it feasible for deployment (average WER below 10%). To our knowledge, this is one of the first studies employing six ATC-related databases, spanning more than 176 hours of speech, whose phraseology and structure are strongly related to ATCO-pilot communications; it therefore addresses the lack of databases that many previous studies have reported. Specifically, we showed that with in-domain ATC databases, even ones that do not come from the same country or airport, the system can produce a “good enough” transcript of a given spoken communication. We also reported new baselines for the Vienna, Prague and Atcosim test sets. Finally, one of the main outcomes of this research is the result of the byte-pair encoding approach on the Prague test set, which reached 5.0% WER. Future research will focus on semi-supervised training, since thousands of hours of untranscribed data can easily be gathered.

Link to our first manuscript, with more details: https://arxiv.org/abs/2006.10304